Decision Tree Algorithm

o Supervised machine

learning algorithms.

o It is used for both a

classification problem as well as for regression problem.

o It predicts the value of a target variable(dependent

variable)

o

A

decision tree represents a procedure for classifying categorical data based on their attributes.

o

The

construction of decision tree does not require any domain knowledge or parameter setting, and

therefore appropriate for exploratory

knowledge discovery.

o

Decision

tree uses the tree representation to solve the problem in which the leaf node

corresponds to a class label and attributes are represented on the internal

node of the tree Decision Tree learning is one of the most widely used and

practical methods for inductive inference over supervised data.

o

Their

representation of acquired knowledge in tree form is intuitive and easy to assimilate by humans.

Decision Tree Classification Example 1

Ø In above diagram leaf node corresponds to a class label are appendicitis, flu, strep, cold and none and attributes are represented on the internal node of the tree i.e pain in abdomen ,throat ,chest ,cough fever etc.

Example 2 Ø Suppose we have a sample of 15 patient data set and we have to predict which drug to suggest to the patient A or B.

Ø In above diagram leaf node

corresponds to a class label i.e Drug A or Drug B and attributes are

represented on the internal node of the tree i.e Age, Sex, BP, Cholesterol etc.

Decision Tree -

Assumptions that we make while using the Decision tree-

•

In

the beginning, we consider the whole training set as the root.

•

Feature

values are preferred to be categorical, if the values continue then they are

converted to discrete before building the model.

•

Based

on attribute values records are distributed recursively.

•

We

use a statistical method for ordering attributes as a root node or the internal

node.

Mathematics

behind Decision tree algorithm:

• Step1: Entropy controls how a Decision

Tree decides to split the data. It affects how a Decision

Tree draws its boundaries.

•

“Entropy

values range from 0 to 1”, Less the value of entropy more it is trusting.

•

Purpose of Entropy:

• Entropy: Entropy is the

measures impurity, disorder, or uncertainty in a bunch

of examples

• Entropy controls how a Decision Tree

decides to split the data. It affects how a Decision

Tree draws its boundaries.

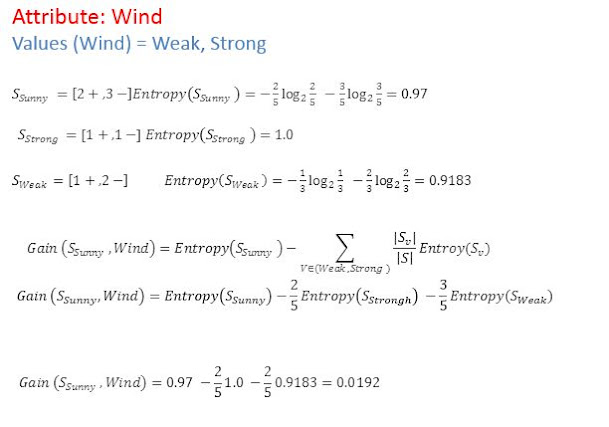

Mathematics behind Decision tree algorithm -

•

Step2:Calculate the

Information Gain

• For each node of the tree, the

information value measures how much information a feature gives us about the

class. The split with the highest information gain will be taken as the first

split and the process will continue until all children nodes are pure, or until

the information gain is 0.

• Information gain we are going to

compute the average of all the entropy-based on the specific split.

•

Sv

= Total sample after the split

Decision

Tree Algorithm – ID3

• Decide

which attribute (splitting‐point) to test at

node N by determining the “best” way to separate or partition the tuples in D into individual classes

• The

splitting criteria is determined so that, ideally, the resulting partitions at

each branch are as “pure” as possible.

– A

partition is pure if all of the tuples in it belong to the same class

Figure 6.3 Basic algorithm for inducing a decision tree from training examples

What is Entropy ?

· · The entropy is a measure of the uncertainty associated with a random variable

· As uncertainty and or randomness increases for a result set so does the entropy

· Values range from 0 – 1 to represent the entropy of information

Information Gain

•

Information

gain is used as an attribute selection

measure

•

Pick

the attribute that has the highest Information

Gain

D: A given data partition

A: Attribute

v: Suppose we were partition the

tuples in D on some attribute A having v distinct

values D is split into v

partition or subsets, {D1, D2, … Dj}, where Dj contains

those tupes in D that have

outcome aj of A.

A decision tree for the concept buys_computer,

indicating whether a customer at All Electronics

is likely to purchase a computer. Each internal (nonleaf) node represents a

test on an attribute. Each leaf node

represents a class (either buys_computer = yes or buy_computers = no.

Comments

Post a Comment